Function approximation by the finite element method

MATMEK-4270

Short recap

We have considered the approximation of functions \(u(x), x \in \Omega =[a, b]\) using \(u(x) \approx u_N(x)\) and

\[ u_N(x) = \sum_{i=0}^N \hat{u}_i \psi_i(x) \]

- \(\psi_i(x)\) have been global basis functions, defined on all of \(\Omega = [a, b]\)

- \(\{\hat{u}_i\}_{i=0}^N\) are the unknowns

We have found \(\{\hat{u}_i\}_{i=0}^N\) using

- The least squares method (variational)

- The Galerkin method (variational)

- The Collocation method (interpolation)

Advantages and disadvantages of the global variational methods

Advantages

- Spectral accuracy

- Efficient for orthogonal basis functions

- No mesh

Disadvantages

- Mainly feasible for simple domains, like lines and rectangles

- Inefficient for non-orthogonal basis functions

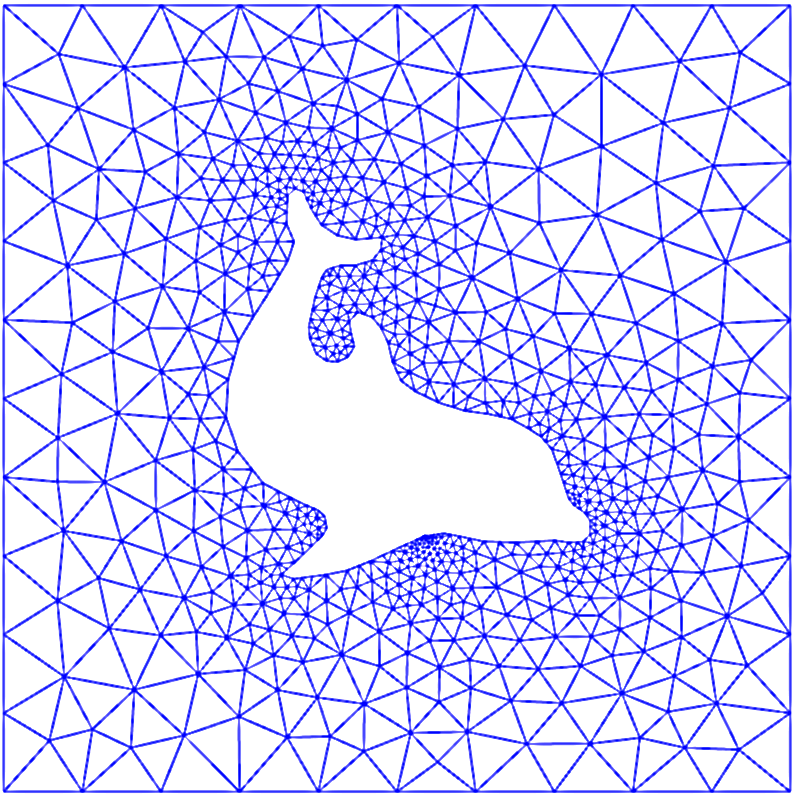

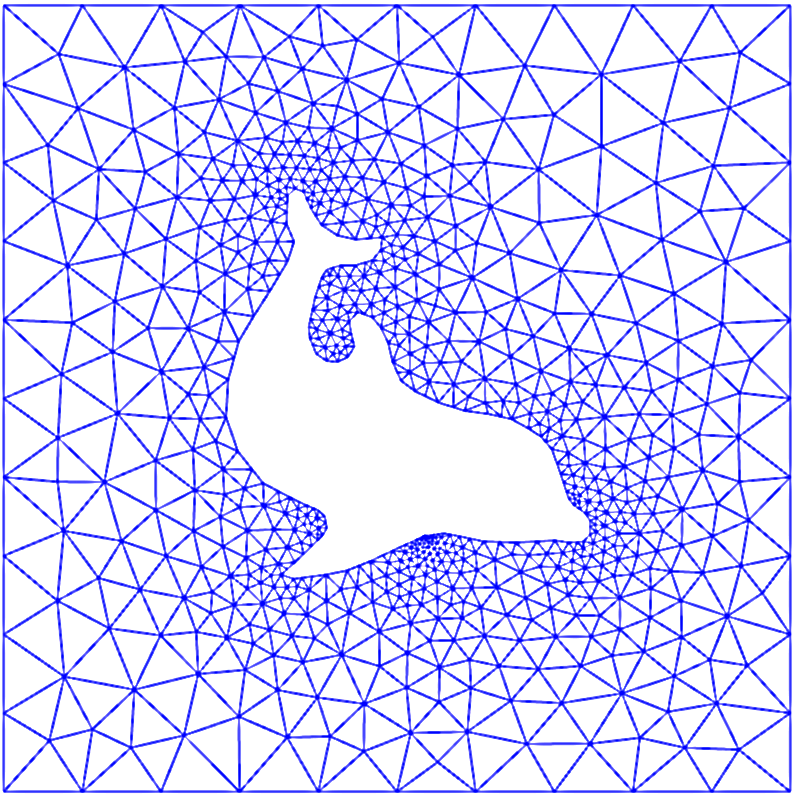

Impossible to use for unstructured meshes, like

The finite element method is a variational method using local basis functions

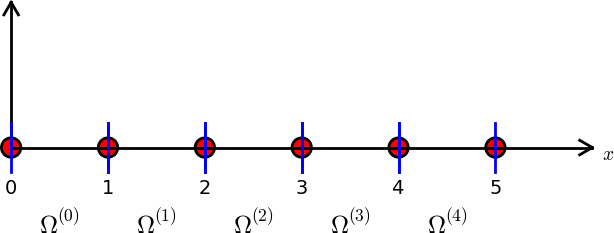

5 global basis functions

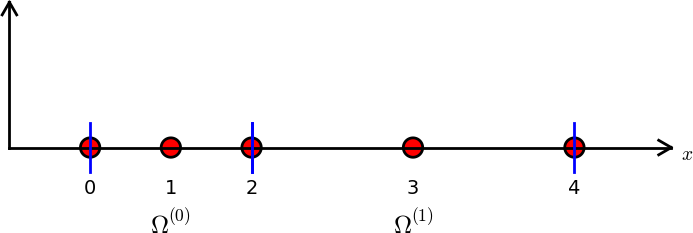

2 local piecewise linear basis functions

The Galerkin formulation is the same whether you use a global approach with Legendre polynomials or a local FEM with piecewise linear polynomials. The difference lies all in the function spaces and the choice of basis.

Find \(u_N \in V_N (=\text{span}\{\psi_j\}_{j=0}^N)\) such that

\[ (u-u_N, v) = 0\quad \forall \, v \in V_N \]

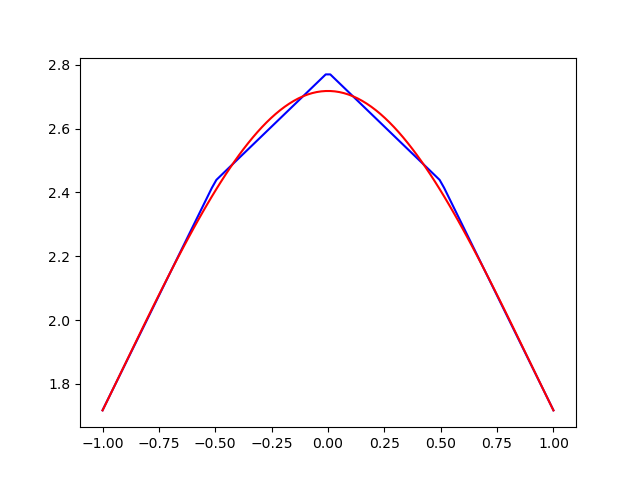

Piecewise linear basis functions lead to piecewise linear approximations \(\small u_N(x)\)

- With FEM \(u_N(x)\) is defined everywhere in the domain \(\Omega\) and not just in mesh points.

- Interpolation is not needed since \(u_N(x) = \sum_{j=0}^N\hat{u}_j \psi_j(x), \quad x \in \Omega\).

The finite element method is especially well suited for unstructured meshes in complex geometries

Structured mesh

Unstructured mesh

But in this course we will learn FEM using simple structured meshes.

The finite element mesh

The domain \(\Omega\) is divided into \(N_e\) smaller, non-overlapping, subdomains \(\Omega^{(e)}\), such that

\[ \Omega = \bigcup_{e=0}^{N_e-1} \Omega^{(e)} \]

- The smaller subdomains between the blue lines are referred to as elements.

- The red dots are referred to as nodes, just like for interpolation methods.

There may be many nodes inside each element

The figure shows a mesh with 5 non-uniform nodes and 2 non-uniform elements

Using more nodes inside each element is how the FEM can achieve higher order accuracy

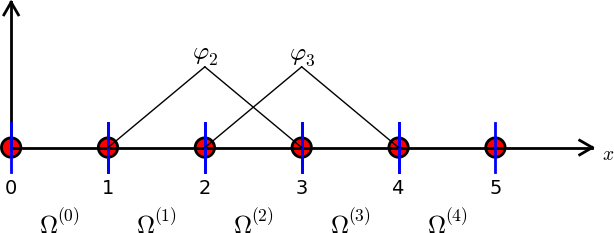

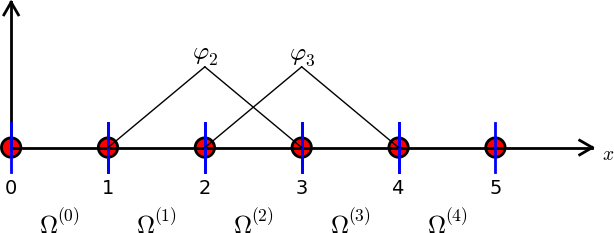

Finite element basis functions

An element with no internal nodes can at best use piecewise linear basis functions

\[ \psi_j(x) = \begin{cases} \frac{x-x_{j-1}}{x_{j}-x_{j-1}} \quad &x \in [x_{j-1}, x_{j}] = \Omega^{(j-1)},\\ \frac{x-x_{j+1}}{x_{j}-x_{j+1}} \quad &x \in [x_{j}, x_{j+1}] = \Omega^{(j)},\\ 0 \quad &\text{otherwise}, \end{cases} \]

The FEM is a variational method

Use a continuous piecewise linear function space \(V_N=\text{span}\{\psi_j\}_{j=0}^N\), where

\[ \psi_j(x) = \begin{cases} \frac{x-x_{j-1}}{x_{j}-x_{j-1}} \quad &x \in [x_{j-1}, x_{j}] = \Omega^{(j-1)}\\ \frac{x-x_{j+1}}{x_{j}-x_{j+1}} \quad &x \in [x_{j}, x_{j+1}] = \Omega^{(j)}\\ 0 \quad &\text{otherwise} \end{cases} \]

To approximate a function \(u(x), x \in \Omega = [a, b]\), we can now use the variational Galerkin method: Find \(u_N \in V_N\) such that

\[ (u-u_N, v) = 0 \quad \forall \, v \in V_N \]

We can still use \(v=\psi_i\) and \(u_N(x) = \sum_{j=0}^N \hat{u}_j \psi_j(x)\), exactly like for the global Galerkin method and obtain:

\[ \sum_{j=0}^N (\psi_j, \psi_i) \hat{u}_j = (u, \psi_i), \quad i=0,1,\ldots, N \]

The element mass matrix

The mass matrix \(A = (a_{ij})_{i,j=0}^N\) is

\[ a_{ij} = (\psi_j, \psi_i) = \int_{\Omega}\psi_j \psi_i dx, \quad (i, j) \in (0, \ldots, N)^2 \]

However, since each basis function is only non-zero on at most two elements, we usually assemble elementwise and add up (this works very well on unstructured meshes!)

\[ a_{ij} = \sum_{e=0}^{N_e-1} {a}_{ij}^{(e)} = \sum_{e=0}^{N_e-1} \int_{\Omega^{(e)}} \psi_j \psi_i dx \]

We define the element mass matrix \(A^{(e)} = ({a}^{(e)}_{ij})_{i,j=0}^N\) as

\[ {a}^{(e)}_{ij} = \int_{\Omega^{(e)}} \psi_j \psi_i dx, \quad (i, j) \in (0, \ldots, N)^2 \]

The finite element method is much more difficult to implement than global methods, because of the local basis functions and unstructured mesh. Yet, the unstructured mesh and local basis functions make the method much more flexible.

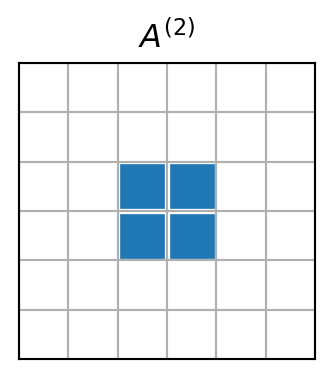

The element mass matrix is highly sparse

\[ {a}^{(e)}_{ij} = \int_{\Omega^{(e)}} \psi_j \psi_i dx, \quad \]

For piecewise linear basis functions there are only 2 non-zero basis functions per element. See element \(\Omega^{(2)}\)

The matrix \({A}^{(2)}\) will have only 4 non-zero items. So it is really a waste of memory using an \((N+1)\times (N+1)\) matrix.

Define a local-to-global map \(q(e, r)\)

\[ q(e, r) = de+r \]

Mapping local index \(r \in (0, \ldots, d)\) on global element \(e\) to the global index \(q(e, r) \in (0, 1, \ldots, N)\). There are \(d+1\) nodes per element.

For unstructured meshed \(q(e, r)\) needs to be stored explicitly (\(r\) numbering is implicit):

\[ q = \Big \{ \begin{matrix} 0: \begin{bmatrix} 0 \\ 1 \\ 2 \end{bmatrix} & 1: \begin{bmatrix} 2 \\ 3 \\ 4 \end{bmatrix} & 2: \begin{bmatrix} 4 \\ 5 \\ 6 \end{bmatrix} \end{matrix} \Big \} \]

Use a local dense element mass matrix

With \(d+1\) nonzero basis functions on element \(e\) all the non-zero items of \(A^{(e)}\) can be stored in the dense matrix:

\[ \tilde{A}^{(e)} = (\tilde{a}_{rs}^{(e)})_{r,s=0}^d \]

\[ \tilde{a}_{rs}^{(e)} = \int_{\Omega^{(e)}} \psi_{q(e,r)} \psi_{q(e,s)} dx = a^{(e)}_{q(e, r), q(e, s)} \]

Note

The matrix \(\tilde{A}^{(e)}\) contains the same nonzero items as \(A^{(e)}\), but \(\tilde{A}^{(e)}\in \mathbb{R}^{(d+1) \times (d+1)}\) is dense, whereas \(A^{(e)} \in \mathbb{R}^{(N+1)\times (N+1)}\) is highly sparse.

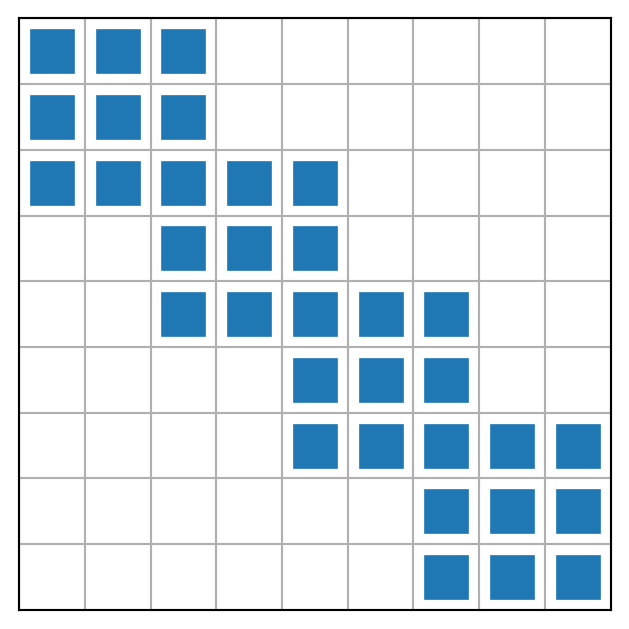

Local to global mapping in assembly of \(A\)

The 4 smaller matrices represent \(\tilde{A}^{(0)}, \tilde{A}^{(1)}, \tilde{A}^{(2)}\) and \(\tilde{A}^{(3)}\)

Finite element assembly: add up for \(e=0,1,\ldots, N_e-1\) and \((r,s) \in (0,1,\ldots, d)^2\)

\[ \quad a_{q(e,r),q(e,s)} \mathrel{+}= \tilde{a}^{(e)}_{r,s} \]

Mapping to reference domain

In assembling the matrix \(A\) we need to compute the element matrix \(\tilde{A}^{(e)}\) many times. Is this really necessary? The integrals

\[ \int_{\Omega^{(e)}} \psi_{q(e,r)} \psi_{q(e,s)} d\Omega, \]

differ only in the domain, whereas the shape of the basis functions is the same regardless of domain. The piecewise linear basis functions are always straight lines.

Let us map all elements to a reference domain \(\Omega^r = [-1, 1]\). The affine map from \(x \in \Omega^{(e)} = [x_{q(e,0)}, x_{q(e, d)}] = [x_L, x_R]\) to \(X \in \Omega^r\) can be written for any element as

\[ x = \frac{1}{2}(x_L+x_R) + \frac{1}{2}(x_R-x_L)X \]

Mapping back and forth is as usual

\[ X(x) \quad \text{or} \quad x(X) \]

Mapping finite element basis functions

The basis functions \(\psi_{q(e,r)}(x)\) are commonly mapped to the Lagrangian basis functions

\[ \psi_{q(e,r)}(x) = \ell_r(X) = \prod_{\substack{0 \le s \le d \\ s \ne r}} \frac{X-X_s}{X_r-X_s} \]

where

\[ X_r = -1 + \frac{2r}{d}, \quad r = 0, 1, \ldots, d \]

and for piecewise linear basis functions (\(d=1\)) we get the following basis functions on the reference domain:

\[ \ell_0(X) = \frac{1}{2}(1-X) \quad \text{and} \quad \ell_1(X) = \frac{1}{2}(1+X) \]

Quadratic elements (\(d=2\))

For quadratic elements the Lagrange basis functions on the reference domain are

\[ (X_0, X_1, X_2) = (-1, 0, 1) \]

\[ \ell_0(X) = \frac{1}{2}X(1-X), \quad \ell_1(X) = (1-X^2), \quad \ell_2(X) = \frac{1}{2}X(1+X) \]

d=3 and \(\small (X_0, X_1, X_2, X_3) = (-1, -1/3, 1/3, 1)\)

\[ \begin{matrix} \ell_0(X) = -\frac{9}{16}(X-1)(X-\tfrac{1}{3})(X+\tfrac{1}{3}) & \ell_1(X) = \frac{27}{16}(X-1)(X-\tfrac{1}{3})(X+{1}) \\ \ell_2(X) = -\frac{27}{16}(X-1)(X+\tfrac{1}{3})(X+1) & \ell_3(X) = \frac{9}{16}(X-\tfrac{1}{3})(X+\tfrac{1}{3})(X+1) \end{matrix} \]

Back to the element matrix

Use a change of variables (\(x\rightarrow X\) and \(\psi_{q(e,r)}(x)=\ell_r(X)\)) for the inner product:

\[ \begin{align*} \tilde{a}^{(e)}_{rs} &= \int_{\Omega^{(e)}} \psi_{q(e,r)}(x) \psi_{q(e,s)}(x) d\Omega, \\ &= \int_{x_L}^{x_R} \psi_{q(e,r)}(x) \psi_{q(e,s)}(x) dx, \\ &= \int_{-1}^1 \ell_{r}(X) \ell_{s}(X) \frac{dx}{dX} dX, \end{align*} \]

where \(dx/dX = h(e)/2\) and \(h(e)=x_{q(e, d)}-x_{q(e, 0)}=x_R-x_L\), such that for any element, regardless of order \(d\), we can compute the elements of the element matrix as

\[ \tilde{a}_{rs}^{(e)} = \frac{h(e)}{2}\int_{-1}^1 \ell_{r}(X) \ell_{s}(X) dX \]

Note that the integral does not depend on element number \(e\)!

Since the integral does not depend on the element

then, instead of computing for each (linear) element:

\[ \tilde{A}^{(e)} = \begin{bmatrix} \int_{\Omega^{(e)}} \psi_{q(e, 0)} \psi_{q(e, 0)} dx & \int_{\Omega^{(e)}} \psi_{q(e, 0)} \psi_{q(e, 1)} dx \\ \int_{\Omega^{(e)}} \psi_{q(e, 1)} \psi_{q(e, 0)} dx & \int_{\Omega^{(e)}} \psi_{q(e, 1)} \psi_{q(e, 1)} dx \end{bmatrix}, \]

we can simply use:

\[ \tilde{A}^{(e)} = \frac{h(e)}{2}\begin{bmatrix} \int_{-1}^1 \ell_{0} \ell_{0} dX & \int_{-1}^1 \ell_{0} \ell_{1} dX \\ \int_{-1}^1 \ell_{1} \ell_{0} dX & \int_{-1}^1 \ell_{1} \ell_{1} dX \end{bmatrix}. \]

with merely a different \(h(e)\) for each element.

Similarly for higher \(d\).

Sympy implementation element mass matrix

Linear (\(d=1\))

h = sp.Symbol('h')

l = Lagrangebasis([-1, 1])

ae = lambda r, s: sp.integrate(l[r]*l[s], (x, -1, 1))

A1e = h/2*sp.Matrix([[ae(0, 0), ae(0, 1)],[ae(1, 0), ae(1, 1)]])

A1e\(\displaystyle \left[\begin{matrix}\frac{h}{3} & \frac{h}{6}\\\frac{h}{6} & \frac{h}{3}\end{matrix}\right]\)

Quadratic (\(d=2\))

l = Lagrangebasis([-1, 0, 1])

ae = lambda r, s: sp.integrate(l[r]*l[s], (x, -1, 1))

A2e = h/2*sp.Matrix(np.array([[ae(i, j) for i in range(3) for j in range(3)]]).reshape(3, 3))

A2e\(\displaystyle \left[\begin{matrix}\frac{2 h}{15} & \frac{h}{15} & - \frac{h}{30}\\\frac{h}{15} & \frac{8 h}{15} & \frac{h}{15}\\- \frac{h}{30} & \frac{h}{15} & \frac{2 h}{15}\end{matrix}\right]\)

Complete assembly implementation

Ae = [A1e, A2e] # previously computed

def get_element_boundaries(xj, e, d=1):

return xj[d*e], xj[d*(e+1)]

def get_element_length(xj, e, d=1):

xL, xR = get_element_boundaries(xj, e, d=d)

return xR-xL

def local_to_global_map(e, r=None, d=1): # q(e, r)

if r is None:

return slice(d*e, d*(e+1)+1)

return d*e+r

def assemble_mass(xj, d=1):

N = len(xj)-1

Ne = N//d

A = np.zeros((N+1, N+1))

for elem in range(Ne):

hj = get_element_length(xj, elem, d=d)

s0 = local_to_global_map(elem, d=d)

A[s0, s0] += np.array(Ae[d-1].subs(h, hj), dtype=float)

return AHigher order mass matrix

[[ 0.0333 0.0167 -0.0083 0. 0. 0. 0. 0. 0. ]

[ 0.0167 0.1333 0.0167 0. 0. 0. 0. 0. 0. ]

[-0.0083 0.0167 0.0667 0.0167 -0.0083 0. 0. 0. 0. ]

[ 0. 0. 0.0167 0.1333 0.0167 0. 0. 0. 0. ]

[ 0. 0. -0.0083 0.0167 0.0667 0.0167 -0.0083 0. 0. ]

[ 0. 0. 0. 0. 0.0167 0.1333 0.0167 0. 0. ]

[ 0. 0. 0. 0. -0.0083 0.0167 0.0667 0.0167 -0.0083]

[ 0. 0. 0. 0. 0. 0. 0.0167 0.1333 0.0167]

[ 0. 0. 0. 0. 0. 0. -0.0083 0.0167 0.0333]]Sparsity pattern:

Note

- The internal nodes represent rows with only 3 nonzero items. The nodes on the boundary between two elements have rows containing 5 nonzero items.

- The mass matrix is not diagonal, but it is sparse.

Finite element assembly of a vector

In solving for

\[ \sum_{j=0}^N(\psi_j, \psi_i) \hat{u}_j = (u, \psi_i), \quad i=0,1,\ldots, N \]

we also need the right hand side

\[ b_i = (u, \psi_i), \quad i = 0, 1, \ldots, N \]

This inner product can also be evaluated elementwise, and mapped just like the mass matrix. We define the element vector similarly as the element matrix

\[ b_i^{(e)} = \int_{\Omega^{(e)}} u(x) \psi_{i}(x) dx, \quad i = 0, 1, \ldots, N \]

\(b_i^{(e)}\) will be highly sparse.

Define a dense local vector

\[ \tilde{b}^{(e)}_r = (u, \psi_{q(e, r)}) = \int_{\Omega^{(e)}} u(x) \psi_{q(e, r)}(x) dx, \quad r = 0, 1, \ldots, d \]

Using as before \(\psi_{q(e, r)}(x)=\ell_r(X)\) we get a mapping to the reference domain

\[ \tilde{b}^{(e)}_r = \frac{h(e)}{2}\int_{-1}^1 u(x(X)) \ell_{r}(X) dX, \quad r = 0, 1, \ldots, d \]

Note

The vector \(\boldsymbol{\tilde{b}}^{(e)}\) needs to be assembled with an integral for each element because of \(u(x(X))\)

Assemble by adding up for all elements \(e=0,1, \ldots, N_e-1\) and \(r=0,1, \ldots, d\)

\[ b_{q(e, r)} \mathrel{+}= \tilde{b}_r^{(e)} \]

Implementation \(\small \boldsymbol{b}^{(e)}\)

def map_true_domain(xj, e, d=1, x=x): # return x(X)

xL, xR = get_element_boundaries(xj, e, d=d)

hj = get_element_length(xj, e, d=d)

return (xL+xR)/2+hj*x/2

def map_reference_domain(xj, e, d=1, x=x): # return X(x)

xL, xR = get_element_boundaries(xj, e, d=d)

hj = get_element_length(xj, e, d=d)

return (2*x-(xL+xR))/hj

def map_u_true_domain(u, xj, e, d=1, x=x): # return u(x(X))

return u.subs(x, map_true_domain(xj, e, d=d, x=x))

def assemble_b(u, xj, d=1):

l = Lagrangebasis(np.linspace(-1, 1, d+1), sympy=False)

N = len(xj)-1

Ne = N//d

b = np.zeros(N+1)

for elem in range(Ne):

hj = get_element_length(xj, elem, d=d)

us = sp.lambdify(x, map_u_true_domain(u, xj, elem, d=d))

integ = lambda xj, r: us(xj)*l[r](xj)

for r in range(d+1):

b[local_to_global_map(elem, r, d)] += hj/2*quad(integ, -1, 1, args=(r,))[0]

return bNote

We need to perform an integral by calling quad for each \(r\) in each element.

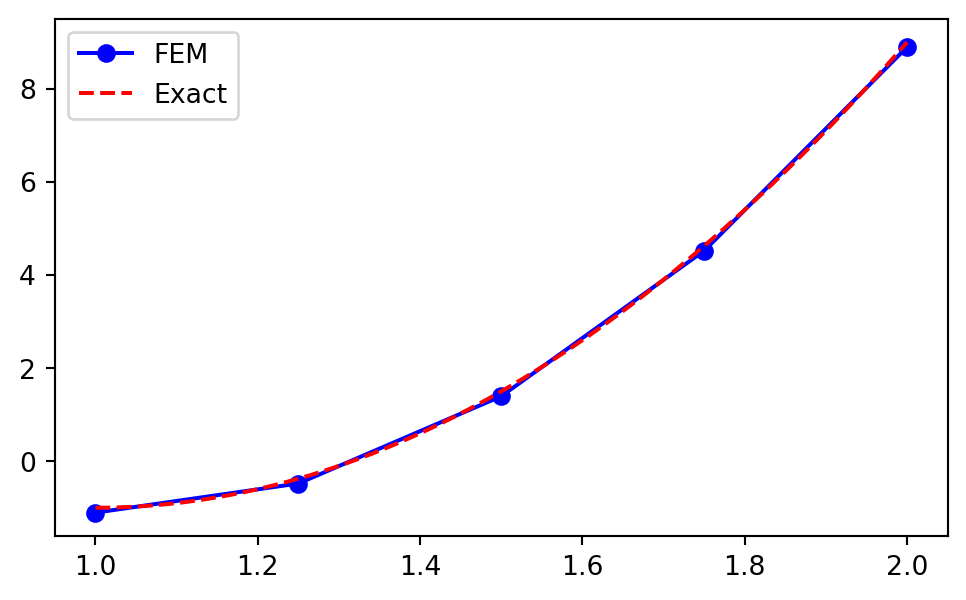

Example: \(\small u(x) = 10(x-1)^2-1, x \in [1, 2]\)

Use the previously implemented assemble_mass and assemble_b to find the approximation of \(u(x)\) using piecewise linear functions and FEM:

def assemble(u, N, domain=(-1, 1), d=1, xj=None):

mesh = np.linspace(domain[0], domain[1], N+1) if xj is None else xj

A = assemble_mass(mesh, d=d)

b = assemble_b(u, mesh, d=d)

return A, b

N = 4

xj = np.linspace(1, 2, N+1)

A, b = assemble(10*(x-1)**2-1, N, d=1, xj=xj)

uh = np.linalg.inv(A) @ b

yj = np.linspace(1, 2, 1000)

plt.figure(figsize=(6, 3.5))

plt.plot(xj, uh, 'b-o', yj, 10*(yj-1)**2-1, 'r--')

plt.legend(['FEM', 'Exact']);

Note

- Since we are using piecewise linear polynomials we can simply plot

uh= \((u_N(x_i))_{i=0}^N=(\hat{u}_i)_{i=0}^N\) andmatplotlibwill correctly fill in a linear profile between the points. - The FEM solution \(u_N(x_i) \ne u(x_i)\)

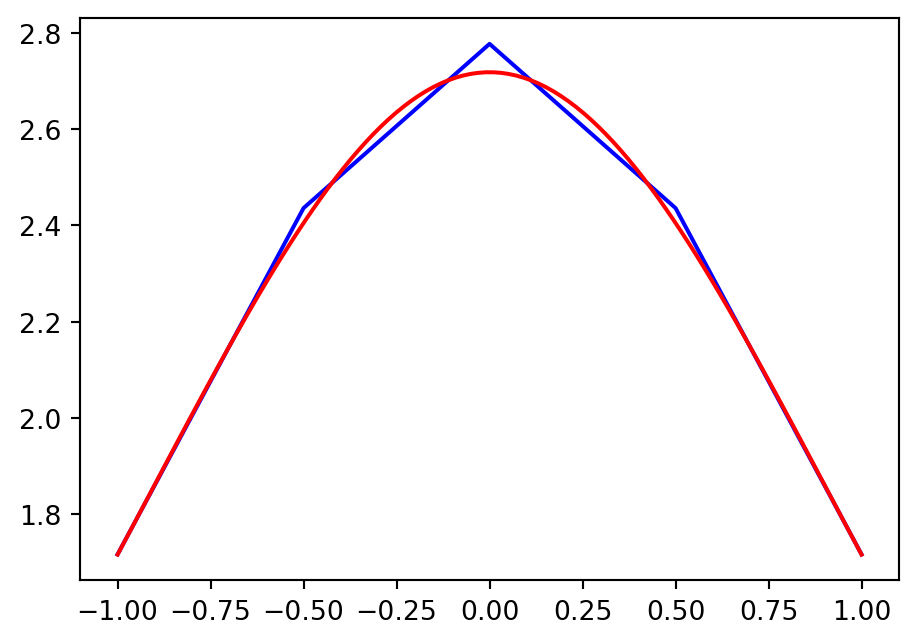

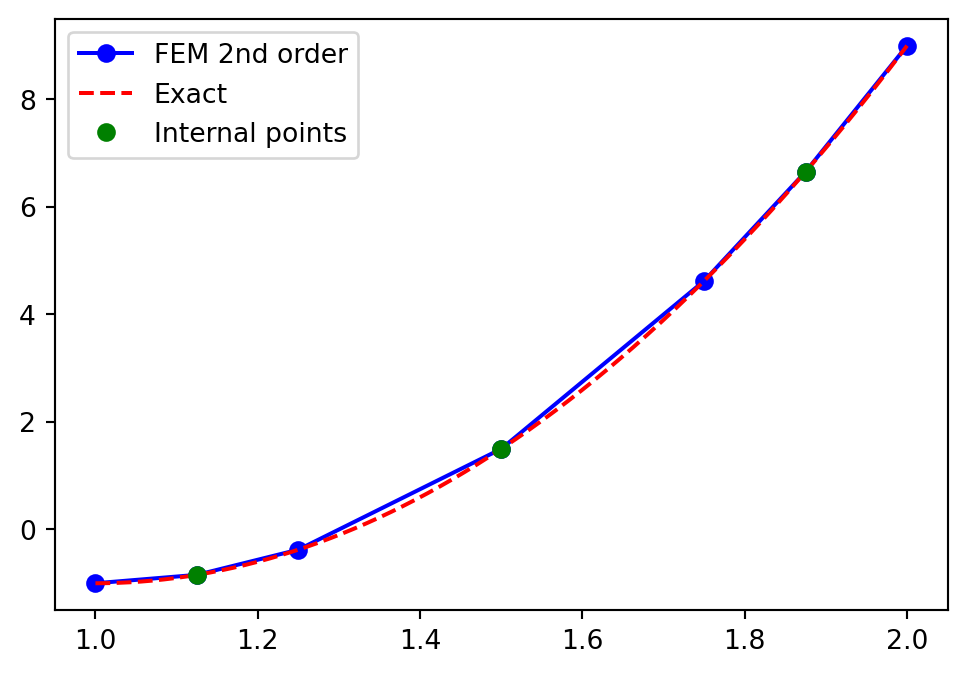

Second order \((d=2)\)

Check that a non-uniform mesh works as well:

\[ x_{2i} = 1+(\cos(2 \pi i / N) + 1)/2 \quad \text{and} \quad x_{2i+1} = \frac{x_{2i}+x_{2(i+1)}}{2} \]

N = 6

xj = np.zeros(N+1)

xj[::2] = 1 + (np.cos(np.arange(N//2+1)*np.pi*2/N)[::-1] + 1)/2

xj[1::2] = 0.5*(xj[:-1:2]+xj[2::2])

A, b = assemble(10*(x-1)**2-1, N, d=2, xj=xj)

uh = np.linalg.inv(A) @ b

yj = np.linspace(1, 2, 1000)

plt.figure(figsize=(6, 4))

plt.plot(xj, uh, '-bo', yj, 10*(yj-1)**2-1, 'r--')

plt.plot(xj[1::2], uh[1::2], 'go')

plt.legend(['FEM 2nd order', 'Exact', 'Internal points']);

Why still linear interpolation? We need to use the higher order \(u_N(x) = \sum_{j=0}^N\hat{u}_j \psi_j(x)\) between mesh points! \(\rightarrow\) FEM evaluation

Finite element evaluation

The finite element solution differs from the finite difference solution in that the solution is automatically defined everywhere within the domain.

\[ u_N(x) = \sum_{j=0}^N\hat{u}_j \psi_j(x) \]

However, most basis functions will be zero at any location \(x\). We need to find which element \(x\) belongs to! And then evaluate only with non-zero basisfunctions

\[ u_N(x) = \sum_{r=0}^d \hat{u}_{q(e, r)} \ell_{r}(X), \quad x \in \Omega^{(e)} \]

def fe_evaluate(uh, p, xj, d=1):

l = Lagrangebasis(np.linspace(-1, 1, d+1), sympy=False)

elem = max(0, np.argmax(p <= xj[::d])-1) # find element containing p

Xx = map_reference_domain(xj, elem, d=d, x=p)

return Lagrangefunction(uh[d*elem:d*(elem+1)+1], l)(Xx)

fe_evaluate(uh, 1.2, xj, d=2), 10*(1.2-1)**2-1(-0.600000000000000, -0.6000000000000002)Evaluate FEM for \(N_d\) points

\[ u_N(x_i) = \sum_{r=0}^d \hat{u}_{q(e, r)} \ell_{r}(X(x_i)), \quad x \in \Omega^{(e)}, \quad i=0, 1, \ldots, N_d-1 \]

Just loop over scalar code for each point

Alternatively, use vectorization, but not really straightforward:

def fe_evaluate_v(uh, pv, xj, d=1):

l = Lagrangebasis(np.linspace(-1, 1, d+1), sympy=False)

# Find points inside elements

elem = (np.argmax((pv <= xj[::d, None]), axis=0)-1).clip(min=0)

xL = xj[:-1:d] # All left element boundaries

xR = xj[d::d] # All right element boundaries

xm = (xL+xR)/2 # middle of all elements

hj = (xR-xL) # length of all elements

Xx = 2*(pv-xm[elem])/hj[elem] # map pv to reference space all elements

dofs = np.array([uh[e*d+np.arange(d+1)] for e in elem], dtype=float)

V = np.array([lr(Xx) for lr in l], dtype=float) # All basis functions evaluated for all points

return np.sum(dofs * V.T, axis=1)More difficult example: \(\small u(x)=e^{\cos x}\)

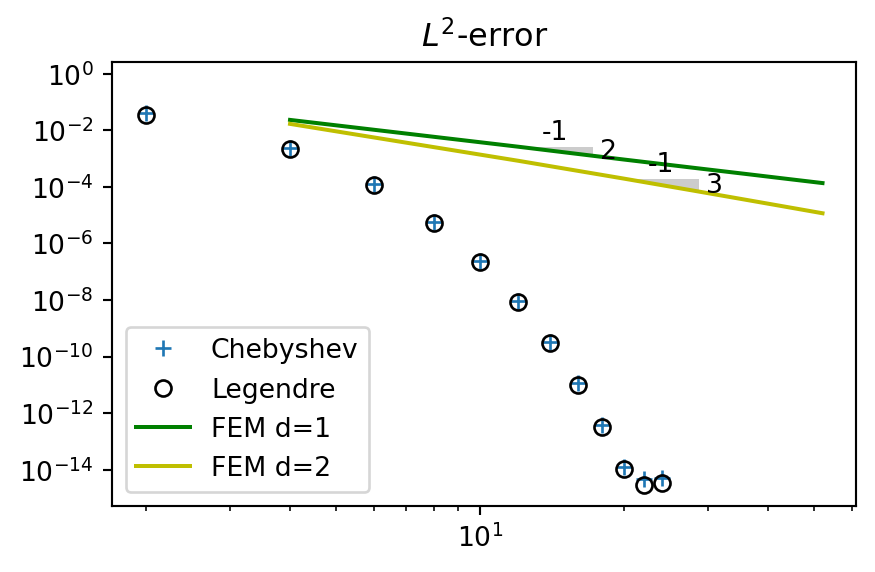

Compute \(L^2(\Omega)\) error and compare with global Chebyshev and Legendre methods

def L2_error(uh, ue, xj, d=1):

yj = np.linspace(-1, 1, 4*len(xj))

uhj = fe_evaluate_v(uh, yj, xj, d=d)

uej = ue(yj)

return np.sqrt(np.trapz((uhj-uej)**2, dx=yj[1]-yj[0]))

u = sp.exp(sp.cos(x))

ue = sp.lambdify(x, u)

err = []

err2 = []

for n in range(2, 30, 4):

N = 2*n

xj = np.linspace(-1, 1, N+1)

A, b = assemble(u, N, (-1, 1), 1)

uh = np.linalg.inv(A) @ b

A2, b2 = assemble(u, N, (-1, 1), 2)

uh2 = np.linalg.inv(A2) @ b2

err.append(L2_error(uh, ue, xj, 1))

err2.append(L2_error(uh2, ue, xj, 2))

Note

This illustrates nicely spectral versus finite order accuracy. With \(d=1\) the FEM obtains second order accuracy and the error disappears as the linear (in the loglog-plot) green curve with slope \(-2\) (from error \(\sim N^{-2}\)). The spectral error on the other hand disappears exponentially as \(\sim e^{-\mu N}\), faster than any finite order.

- Finite element software

- Developed originally at Chalmers University of Technology and UiO

- Very flexible and easy to use

- Solves PDEs with many different finite elements, including Lagrange

For installation: https://github.com/FEniCS/dolfinx

Anaconda

Linux

Docker

First example - function approximation using piecewise linear Lagrange elements

from mpi4py import MPI

from dolfinx import mesh, fem, cpp

from dolfinx.fem.petsc import LinearProblem

import ufl

from ufl import dx, inner

msh = mesh.create_interval(MPI.COMM_SELF, 4, (-1, 1))

V = fem.functionspace(msh, ("Lagrange", 1))

u = ufl.TrialFunction(V)

v = ufl.TestFunction(V)

xp = ufl.SpatialCoordinate(msh)

ue = ufl.exp(ufl.cos(xp[0]))

a = inner(u, v) * dx

L = inner(ue, v) * dx

problem = LinearProblem(a, L)

uh = problem.solve()Result 4 piecewise linear basis functions

Exactly the same result for the same method

FEniCS uses exactly the same method with piecewise linear basis functions as we have described using Sympy/Numpy and as such we get exactly the same matrix/vectors:

FEniCS

array([[0.1667, 0.0833, 0. , 0. , 0. ],

[0.0833, 0.3333, 0.0833, 0. , 0. ],

[0. , 0.0833, 0.3333, 0.0833, 0. ],

[0. , 0. , 0.0833, 0.3333, 0.0833],

[0. , 0. , 0. , 0.0833, 0.1667]])array([0.4892, 1.1865, 1.3317, 1.1865, 0.4892])array([1.7169, 2.4361, 2.7772, 2.4361, 1.7169])Summary

- The finite element method (FEM) is a variational method using local basis functions.

- The FEM uses the same Galerkin method as the methods using global basis functions.

- The FEM is assembled by running over all elements and assembling local matrices and vectors that are subsequently added to global matrices and vectors.

- Since all assembly work is performed elementwise, the FEM is very well suited for unstructured meshes.